Introduction

The process of improving an image and extracting relevant information from it is known as image processing. It is becoming increasingly important and is widely used in industries such as filmmaking, diagnostic devices, manufacturing, and weather prediction. In some of these domains the images are very large, yet the processing time must be very short, and real-time processing is sometimes required. Parallel processing is therefore an essential part of any high-performance computing model: it uses a large number of computational resources together to complete a difficult task or solve a problem [1].

The CPU and RAM are the resources that are specific to parallel processing. Image processing using distributed programming is a new technique to handle image processing problems that need long

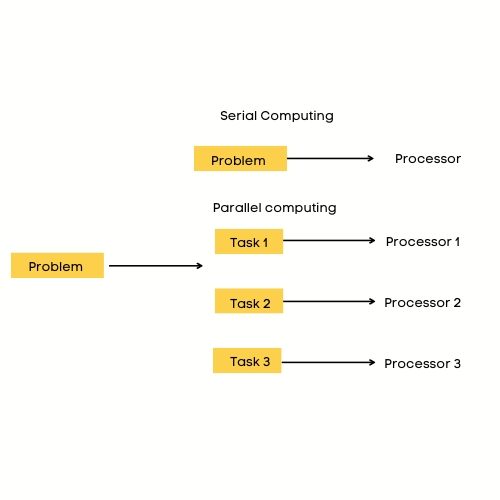

processing times or the handling of enormous amounts of data in an "acceptable" amount of time. The primary principle behind parallel image recognition is to break down an issue into little tasks and address them all at the same time, dividing the overall time spent on each task (in the best case). Parallel image processing cannot be used to solve all problems; in other words, not all issues can be programmed in a parallel manner2.

Fig1. Serial versus parallel computing3
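The principle of breaking a problem into small tasks and solving them simultaneously can be sketched as follows. This is a minimal illustration, not the method of the cited sources. It assumes a greyscale image stored as a list of pixel rows, splits it into horizontal bands, inverts each band concurrently, and stitches the bands back together. The function names are hypothetical. Threads keep the example self-contained; in CPython the global interpreter lock limits thread speedups for pure-Python, CPU-bound work, so a real implementation would more likely use processes or a vectorised library.

```python
from concurrent.futures import ThreadPoolExecutor

# Assumption: an image is a list of rows of 8-bit grey levels (0-255).
Image = list[list[int]]


def invert_band(band: Image) -> Image:
    """Invert every pixel in one horizontal band of the image."""
    return [[255 - px for px in row] for row in band]


def split_rows(image: Image, parts: int) -> list[Image]:
    """Split the image into at most `parts` contiguous bands of rows."""
    size = max(1, -(-len(image) // parts))  # ceiling division, at least 1 row
    return [image[i:i + size] for i in range(0, len(image), size)]


def parallel_invert(image: Image, workers: int = 4) -> Image:
    """Process each band concurrently, then stitch the bands back in order."""
    bands = split_rows(image, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        processed = list(pool.map(invert_band, bands))
    # pool.map yields results in input order, so concatenation restores the image.
    return [row for band in processed for row in band]
```

For example, `parallel_invert([[0, 10], [20, 30], [40, 50]], workers=2)` returns `[[255, 245], [235, 225], [215, 205]]`, the same result as inverting the whole image serially; only the way the work is divided changes.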

Features of a Parallel Program

A parallel program must have certain characteristics in order to operate correctly and efficiently; otherwise, it may run slowly or not behave as planned. Some of these characteristics are:

- a) Granularity: This describes the size of the units (tasks) into which a problem is divided. It is classified as follows. Coarse-grained: there are few tasks, each requiring more intensive computation. Fine-grained: there are many small tasks, and the computation in each is less demanding.

- b) Synchronization: This coordinates processes so that two or more of them do not overlap in conflicting ways, for example by modifying shared data at the same time.

- c) Latency: This is the time between issuing a request and receiving the requested information.

- d) Scalability: This is an algorithm's ability to maintain its efficiency when the processing power and the size of the problem grow in proportion [4].

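- e) Speedup and efficiency: These are metrics for evaluating the quality of a parallel implementation. Speedup compares the serial run time with the parallel run time, and efficiency relates that speedup to the number of processors used.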

- f) Overheads: Parallel execution takes some additional time (for example, for communication and synchronization) that serial execution does not.

Improving parallel execution performance requires computer systems or servers with many integrated processors and improved message transmission between those processors.
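The speedup and efficiency metrics in item e) are commonly defined as S = T(1) / T(p) and E = S / p, where T(1) is the serial run time and T(p) is the run time on p processors. The sketch below applies these definitions to hypothetical timings. An efficiency below 1.0 reflects the overheads in item f), and, in the sense of item d), a scalable algorithm keeps E roughly constant as p and the problem size grow together.

```python
def speedup(serial_time: float, parallel_time: float) -> float:
    """Speedup S(p) = T(1) / T(p): how many times faster the parallel run is."""
    if parallel_time <= 0:
        raise ValueError("parallel_time must be positive")
    return serial_time / parallel_time


def efficiency(serial_time: float, parallel_time: float, processors: int) -> float:
    """Efficiency E(p) = S(p) / p: the fraction of ideal linear speedup achieved."""
    if processors < 1:
        raise ValueError("processors must be at least 1")
    return speedup(serial_time, parallel_time) / processors


# Hypothetical timings: 120 s serially, 40 s on 4 processors.
s = speedup(120.0, 40.0)
e = efficiency(120.0, 40.0, 4)
print(f"speedup={s:.2f}, efficiency={e:.2f}")  # speedup=3.00, efficiency=0.75
```

Here four processors give a threefold speedup rather than a fourfold one, so a quarter of the available processing capacity is lost to overheads.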

Parallel processing improves throughput by allowing tasks to be completed faster, so that a large number of jobs can be finished in a given amount of time.

Types of Parallel Processing

Pipeline parallelism:

In this type of processing, a long sequence of operations (stages) is parallelized by overlapping consecutive stages in time, so that a large number of tasks are in progress simultaneously. The relational model fits this approach well: the output of one relational operator becomes the input of another, which would otherwise introduce waiting periods. With the proper application of pipeline parallelism, a significant amount of time can be saved when completing a task [5].
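Pipeline parallelism can be sketched as a chain of stages connected by FIFO queues, each stage running in its own thread, so that the second stage can work on one image while the first stage is already processing the next. This is a minimal sketch under stated assumptions: the stage functions `brighten` and `threshold` and the list-of-pixels image format are hypothetical, and error handling is omitted (an exception inside a stage would stall the pipeline).

```python
import queue
import threading

SENTINEL = object()  # unique end-of-stream marker passed down the pipeline


def stage(func, inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Apply `func` to each item from `inbox` and pass the result downstream."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            outbox.put(SENTINEL)  # propagate shutdown to the next stage
            return
        outbox.put(func(item))


def run_pipeline(items, funcs):
    """Chain the stages with queues; each stage runs in its own thread."""
    queues = [queue.Queue() for _ in range(len(funcs) + 1)]
    threads = [
        threading.Thread(target=stage, args=(f, queues[i], queues[i + 1]))
        for i, f in enumerate(funcs)
    ]
    for t in threads:
        t.start()
    for item in items:
        queues[0].put(item)
    queues[0].put(SENTINEL)

    results = []
    while (out := queues[-1].get()) is not SENTINEL:
        results.append(out)
    for t in threads:
        t.join()
    return results


def brighten(img):
    """Hypothetical stage 1: add 50 to each pixel, clipped at 255."""
    return [min(255, px + 50) for px in img]


def threshold(img):
    """Hypothetical stage 2: binarize at grey level 128."""
    return [255 if px >= 128 else 0 for px in img]


print(run_pipeline([[10, 100, 200], [90, 60, 30]], [brighten, threshold]))
# [[0, 255, 255], [255, 0, 0]]
```

Because each stage is a single thread reading from a FIFO queue, results come out in the same order as the input images.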

Independent or Natural Parallelism:

The processes in this type of parallelism are independent of one another, so they can all run at the same time without waiting for each other. As a result, the total completion time is significantly shortened.
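With independent tasks, the only coordination needed is collecting the results at the end. The following is a minimal sketch, assuming a hypothetical batch of small greyscale images and a hypothetical `mean_brightness` task. `ThreadPoolExecutor` is used for portability; `ProcessPoolExecutor` offers the same `submit` interface when true CPU parallelism is needed.

```python
from concurrent.futures import ThreadPoolExecutor


def mean_brightness(image: list[list[int]]) -> float:
    """Average grey level of one image; it depends on no other image."""
    pixels = [px for row in image for px in row]
    return sum(pixels) / len(pixels)


# Hypothetical batch of independent greyscale images.
images = {
    "a": [[0, 0], [0, 0]],
    "b": [[255, 255], [255, 255]],
    "c": [[0, 255], [255, 0]],
}

# No task waits on another, so all of them can be submitted at once.
with ThreadPoolExecutor() as pool:
    futures = {name: pool.submit(mean_brightness, img) for name, img in images.items()}
    results = {name: f.result() for name, f in futures.items()}

print(results)  # {'a': 0.0, 'b': 255.0, 'c': 127.5}
```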

Inter-query and intra-query parallelism:

In inter-query parallelism, transactions are self-contained: no transaction depends on the completion of another. Several independent queries are executed at the same time, and by allocating each one to a different CPU, a large number of CPUs can be kept active. Intra-query parallelism, by contrast, decomposes a single large and difficult query into smaller sub-problems in order to speed up its completion [6]. It then runs these smaller jobs in parallel by allocating them to different CPUs. This form of parallelism is a natural choice for decision support system (DSS) operations, where a single transaction examines, computes over, and updates thousands of database blocks.
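Intra-query parallelism can be illustrated in the image domain by splitting one "query", here a grey-level histogram of a whole image, into sub-queries over partitions of rows, running them concurrently, and merging the partial answers. This is a hypothetical sketch, not a database implementation; the row blocks stand in for the database blocks a DSS query would scan, and the function names are illustrative.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partial_histogram(rows: list[list[int]]) -> Counter:
    """Sub-query: count grey levels in one partition (a block of rows)."""
    return Counter(px for row in rows for px in row)


def parallel_histogram(image: list[list[int]], partitions: int = 4) -> Counter:
    """Answer one query (the full histogram) by splitting it across partitions."""
    size = max(1, -(-len(image) // partitions))  # rows per partition, rounded up
    blocks = [image[i:i + size] for i in range(0, len(image), size)]
    with ThreadPoolExecutor(max_workers=partitions) as pool:
        partials = list(pool.map(partial_histogram, blocks))
    # Combine step: adding the partial counts gives the same answer as a serial scan.
    total = Counter()
    for part in partials:
        total.update(part)
    return total
```

For example, `parallel_histogram([[0, 0, 255], [255, 128, 0], [128, 128, 255]], partitions=2)` counts three pixels each at levels 0, 128, and 255, identical to a single serial pass over the image.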

Task parallelism:

In the task-parallel strategy, low-level image processing methods are grouped into tasks, and each task is assigned to a different processing unit. Image processing software involves a number of different activities. Effective data decomposition and result composition are the most important considerations in a task-parallel strategy [7].
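In a task-parallel strategy, different operations, rather than different pieces of data, become the tasks. The sketch below runs three hypothetical low-level operations on the same image concurrently and then composes their results into one report, corresponding to the decomposition and composition steps mentioned above. The operations and the 50-level edge threshold are illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def min_max(image):
    """Task 1: darkest and brightest grey levels."""
    pixels = [px for row in image for px in row]
    return min(pixels), max(pixels)


def mean(image):
    """Task 2: average grey level."""
    pixels = [px for row in image for px in row]
    return sum(pixels) / len(pixels)


def horizontal_edges(image, threshold=50):
    """Task 3: count horizontally adjacent pixel pairs differing by more than threshold."""
    return sum(
        1
        for row in image
        for left, right in zip(row, row[1:])
        if abs(right - left) > threshold
    )


# Each distinct operation is one task; all tasks read the same input image.
TASKS = {"min_max": min_max, "mean": mean, "edges": horizontal_edges}


def run_tasks(image):
    with ThreadPoolExecutor(max_workers=len(TASKS)) as pool:
        futures = {name: pool.submit(fn, image) for name, fn in TASKS.items()}
        # Composition step: gather the separate task results into one report.
        return {name: f.result() for name, f in futures.items()}


print(run_tasks([[0, 0, 200], [200, 200, 0]]))
# {'min_max': (0, 200), 'mean': 100.0, 'edges': 2}
```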

Conclusion

Thus, data, task, and pipeline parallelism, as used in image processing, help produce results more efficiently. Many image processing techniques, such as edge detection, histogram equalisation, noise removal, image registration, image segmentation, feature extraction, and various optimization strategies, benefit from parallel computing [8].

List of Universities We Serve

Indian Institute of Science

University of Delhi

Jawaharlal Nehru University

University of Hyderabad

IIT Kharagpur

About Us

Tutors India is one of the world's reputed academic guidance providers and, over the past 15 years, has guided more than 4,500 Ph.D. scholars and 10,500 Master's students across the globe. We support students, research scholars, entrepreneurs, and professionals from various organizations by providing consistently high-quality writing and data analysis services. We value every client and make sure their requirements are identified and understood by our specialized professionals and analysts, who have the experience to deliver technically sound output within the requested timeframe. Writers at Tutors India are best referred to as 'Researchers', since every topic they handle is unique and challenging.

We specialize in handling text and data, i.e., content development and statistical analysis, in which our expert analysts use the latest statistical applications to determine the outcome of the data analysed. Qualified and experienced researchers, including Ph.D. holders, statisticians, and research analysts, offer cutting-edge research consulting and writing services to meet your business information or academic project requirements. Our experts are passionate about research and personal assistance, and we work closely with you to deliver professional, high-quality output within your stipulated time frame. Our services cover vast areas, and we support either part or all of a research paper/service, as per your requirement, at a competitive price.

References

- https://sci-hub.se/10.1016/j.knosys.2018.10.025

- https://sci-hub.se/10.1016/j.ins.2019.02.049

- https://www.teldat.com/blog/en/parallel-computing-bitinstruction-task-level-parallelism-multicore-computers/

- https://sci-hub.se/10.1007/s41965-018-00002-x

- https://sci-hub.se/10.1088/1742-6596/803/1/012152

- https://sci-hub.se/10.19026/rjaset.12.2324

- https://sci-hub.se/10.1109/sceecs.2016.7509316

- https://sci-hub.se/10.1155/2017/5767521